Spark Notebook Orchestration in Microsoft Fabric can be significantly improved by replacing traditional looping methods in pipelines with a Master-Worker pattern. Instead of initiating a separate Spark session for each notebook, this approach uses a single session to execute multiple notebooks in parallel through a structured DAG (Directed Acyclic Graph). This not only reduces cost and improves performance, but also enables better debugging, dependency management, and execution control, all from within a single master notebook.

When orchestrating Spark notebook executions in Microsoft Fabric, developers often resort to looping through notebook activities within the pipeline. However, a more efficient and scalable method is to use a Master-Worker model, where a master notebook handles the orchestration and coordination of worker notebooks directly at the Spark session level.

This method reduces overhead, improves concurrency control, and enhances debugging while also lowering the cost of Spark compute by avoiding redundant session initialisation.

Why use the Master-Worker Model in Fabric?

Traditional approaches to Spark Notebook Orchestration, such as executing notebooks sequentially or in parallel via pipeline activities (e.g., ForEach with notebook activities), can lead to redundant Spark session initiations, increasing latency and costs. The Master-Worker pattern addresses these challenges by offering the following capabilities:

- Run multiple notebooks concurrently with custom concurrency limits

- Manage dependencies and execution order using a JSON-based Directed Acyclic Graph (DAG)

- Optimise Spark resource usage through central orchestration

- Provide better observability via hierarchical execution views in the monitoring hub

- Handle and propagate errors clearly to the pipeline level

For a deeper understanding of orchestrating notebook-based workflows in Fabric, refer to Microsoft’s guide on using Fabric Data Factory Data Pipelines.

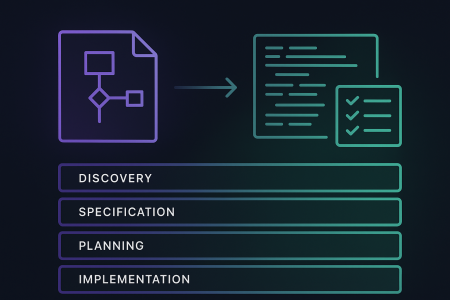

A step-by-step guide to Spark Notebook Orchestration

Simplified Sequence Diagram of Master-Worker Notebook Interaction

1. Metadata retrieval

Initiate the pipeline by fetching metadata configurations for the batch to process. This can be achieved by calling a Metadata Service endpoint or querying a Metadata DB, which returns a JSON payload detailing the entities to be processed.

2. Preprocessing

Perform preliminary parsing of the metadata within the pipeline to extract processing intelligence, such as identifying source-target pairs and determining the required notebooks for execution.

3. Call to Master Notebook

The pipeline calls the master notebook via a notebook activity, passing in the JSON payload for all the entities to be processed along with any other required parameters.

Upon receiving this payload, the master notebook parses it to determine the processes to perform, the source entities, and target entities.
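As a minimal sketch of this parsing step, the snippet below splits a payload into index-aligned source, target and process lists. The payload layout (`job_id`, `entities`, `source`, `target`, `process`) and the helper name `parse_payload` are assumptions made for illustration; the real structure depends on what your metadata service returns.

```python
import json

# Hypothetical payload: the field names are illustrative assumptions,
# not a format defined by Fabric or by the metadata service.
p_payload = json.dumps({
    "job_id": "job-001",
    "entities": [
        {
            "source": {"source_name": "erp", "schema_name": "Sales", "object_name": "Customer"},
            "target": {"schema_name": "Bronze", "object_name": "Customer"},
            "process": {"notebook_name": "BronzeNotebook", "cell_timeout": 90},
        },
        {
            "source": {"source_name": "erp", "schema_name": "Sales", "object_name": "OrderHeader"},
            "target": {"schema_name": "Bronze", "object_name": "OrderHeader"},
            "process": {"notebook_name": "BronzeNotebook", "cell_timeout": 90},
        },
    ],
})


def parse_payload(payload: str):
    """Split the JSON payload into a job id and three index-aligned lists."""
    config = json.loads(payload)
    entities = config.get("entities", [])
    sources = [entity["source"] for entity in entities]
    targets = [entity["target"] for entity in entities]
    processes = [entity["process"] for entity in entities]
    return config.get("job_id"), sources, targets, processes


p_job_id, source_entity_list, target_entity_list, process_list = parse_payload(p_payload)
print(p_job_id, len(source_entity_list))  # job-001 2
```

Keeping the three lists aligned by position means entry `i` of each list describes the same entity, which makes it straightforward to build one DAG activity per entity.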

4. Parsing and DAG creation

The master notebook:

- Parses the JSON to extract entity details and processing logic.

- Packages notebook execution steps into a DAG, specifying:

  - Source and target entities

  - The notebook to execute

  - Parameters

  - Timeout values

  - Concurrency limit

5. Notebook Execution via runMultiple()

Using the DAG, the master notebook calls:

notebookutils.notebook.runMultiple(dag, {"displayDAGViaGraphviz": False})

This method handles:

- Concurrent notebook execution

- Respecting dependencies and timeouts

- Queuing notebooks based on cluster capacity*

*Depending on the available cluster capacity, some notebooks start executing immediately and others are queued, up to the concurrency limit; the remaining notebooks wait until a slot becomes free.
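To build intuition for how dependencies and the concurrency limit interact, here is a small pure-Python simulation. It is not how Fabric schedules notebooks internally; the function `simulate_waves` is a hypothetical helper that groups activities into simplified "waves", assuming every activity in a wave finishes before the next wave starts.

```python
def simulate_waves(dag):
    """Group activities into waves that respect dependencies and the concurrency limit.

    Simplification: each wave is assumed to finish completely before the next begins.
    """
    concurrency = dag.get("concurrency", 2)
    # Map each pending activity to the set of activities it depends on.
    pending = {
        activity["name"]: set(activity.get("dependencies", []))
        for activity in dag["activities"]
    }
    done = set()
    waves = []
    while pending:
        # An activity is ready once all of its dependencies have completed.
        ready = [name for name, deps in pending.items() if deps <= done]
        if not ready:
            raise ValueError("Cycle or missing dependency among: " + ", ".join(sorted(pending)))
        wave = ready[:concurrency]  # the rest stay queued for a later wave
        waves.append(wave)
        done.update(wave)
        for name in wave:
            del pending[name]
    return waves


example_dag = {
    "activities": [
        {"name": "ExtractA"},
        {"name": "ExtractB"},
        {"name": "ExtractC"},
        {"name": "TransformA", "dependencies": ["ExtractA"]},
    ],
    "concurrency": 2,
}
print(simulate_waves(example_dag))
# [['ExtractA', 'ExtractB'], ['ExtractC', 'TransformA']]
```

With a concurrency of 2, `ExtractC` has no dependencies but still has to wait for a free slot, while `TransformA` becomes eligible as soon as `ExtractA` has completed.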

6. Execution result handling

As the worker notebooks complete execution, they return their output to the master notebook signalling success or failure. If a worker notebook fails, the master notebook fails and bubbles up the error to the pipeline.

For detailed information on runMultiple, refer to Microsoft’s documentation on Microsoft Spark Utilities (MSSparkUtils) for Fabric.
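The result handling can be sketched as follows. This assumes that the value returned by runMultiple is a dictionary keyed by activity name, with an `exitVal` and an `exception` entry per activity; treat that shape as an assumption and confirm it against the documentation for your runtime. The helper `summarise_results` and the sample data are hypothetical.

```python
def summarise_results(results):
    """Split per-activity results into succeeded and failed activities.

    Assumes each entry looks like {"exitVal": ..., "exception": ...}.
    """
    succeeded, failed = {}, {}
    for name, outcome in results.items():
        error = outcome.get("exception")
        if error:
            failed[name] = str(error)
        else:
            succeeded[name] = outcome.get("exitVal")
    return succeeded, failed


# Sample data standing in for the value returned by runMultiple (illustrative only).
sample_results = {
    "CustomerExtraction": {"exitVal": "rows=120", "exception": None},
    "OrderExtraction": {"exitVal": None, "exception": "TimeoutError: cell exceeded 90s"},
}

succeeded, failed = summarise_results(sample_results)
if failed:
    message = "; ".join(f"{name}: {error}" for name, error in failed.items())
    # In a master notebook you would raise here so the pipeline activity fails.
    print(f"Failed activities -> {message}")
```

A worker notebook can hand a value back to the master with `notebookutils.notebook.exit(value)`, which is what would populate the exit value in a structure like the one assumed above.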

Example: DAG creation

Here’s a simplified code snippet showing how to package notebooks dynamically into a DAG based on metadata:

import json

# Assuming the configs are maintained as sorted, index-aligned lists in the JSON payload
activity_list = []

for source_entity_config, target_entity_config, process_config in zip(
    source_entity_list, target_entity_list, process_list
):
    activity = dict()

    # activity name must be unique
    activity["name"] = f'{source_entity_config.get("source_name")}.{source_entity_config.get("schema_name","unknown")}.{source_entity_config.get("object_name")}'
    activity["path"] = process_config.get("notebook_name")
    activity["timeoutPerCellInSeconds"] = process_config.get("cell_timeout")

    # notebook parameters
    notebook_params = {
        "p_job_id": p_job_id,
        "p_source_entity": json.dumps(source_entity_config),
        "p_target_entity": json.dumps(target_entity_config),
    }
    activity["args"] = notebook_params

    activity_list.append(activity)

# Formulate DAG
dag = {
    "activities": activity_list,
    "timeoutInSeconds": max_timeout,  # max timeout for the entire DAG
    "concurrency": batch_count,  # max number of notebooks to run concurrently
}

returned_value = notebookutils.notebook.runMultiple(dag, {"displayDAGViaGraphviz": False})

Implementing Notebook Orchestrations across layers

Beyond individual job execution, the master notebook can also orchestrate full data flows across layers (e.g., Bronze → Silver → Gold). Here’s a hardcoded DAG example for such orchestration:

# run multiple notebooks with parameters
DAG = {
    "activities": [
        {
            "name": "CustomerExtraction",  # activity name, must be unique
            "path": "BronzeNotebook",  # notebook path
            "timeoutPerCellInSeconds": 90,  # max timeout for each cell, default to 90 seconds
            "args": {"source_table": "Sales.Customer", "target_table": "Bronze.Customer"},  # notebook parameters
        },
        {
            "name": "OrderExtraction",
            "path": "BronzeNotebook",
            "timeoutPerCellInSeconds": 90,
            "args": {"source_table": "Sales.OrderHeader", "target_table": "Bronze.OrderHeader"},
        },
        {
            "name": "CustomerTransform",
            "path": "SilverNotebook",
            "timeoutPerCellInSeconds": 90,
            "args": {"source_table": "Bronze.Customer", "target_table": "Sales.Customer", "load_type": "SCD2"},
            "dependencies": ["CustomerExtraction"],
        },
        {
            "name": "OrderTransform",
            "path": "SilverNotebook",
            "timeoutPerCellInSeconds": 120,
            "args": {"source_table": "Bronze.OrderHeader", "target_table": "Sales.OrderHeader", "load_type": "SCD1"},
            "dependencies": ["OrderExtraction"],
        },
        {
            "name": "DimCustomerLoad",
            "path": "GoldNotebook",
            "timeoutPerCellInSeconds": 90,
            "args": {"source_table": "Sales.Customer", "target_table": "Sales.DimCustomer", "load_type": "Upsert"},
            "dependencies": ["CustomerTransform"],
        },
        {
            "name": "FactOrderLoad",
            "path": "GoldNotebook",
            "timeoutPerCellInSeconds": 120,
            "args": {"source_table": "Sales.OrderHeader", "target_table": "Sales.FactOrder", "load_type": "Upsert"},
            "retry": 1,
            "retryIntervalInSeconds": 10,
            "dependencies": ["CustomerTransform", "OrderTransform"],  # list of activity names that this activity depends on
        },
    ],
    "timeoutInSeconds": 43200,  # max timeout for the entire DAG, default to 12 hours
    "concurrency": 2,  # max number of notebooks to run concurrently, default to 2
}

notebookutils.notebook.runMultiple(DAG, {"displayDAGViaGraphviz": False})

Refer to the official runMultiple documentation for full details on syntax, retries, and visualisation options.
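Before handing a DAG to runMultiple, it can be worth checking it for structural problems such as duplicate activity names, dependencies that point at unknown activities, or circular dependencies. The sketch below is one possible way to do this in plain Python; `validate_dag` is a hypothetical helper and is not part of notebookutils.

```python
def validate_dag(dag):
    """Return a list of human-readable problems found in a DAG dictionary."""
    problems = []
    activities = dag.get("activities", [])
    names = [activity["name"] for activity in activities]

    # Activity names must be unique.
    duplicates = sorted({name for name in names if names.count(name) > 1})
    if duplicates:
        problems.append("Duplicate activity names: " + ", ".join(duplicates))

    # Every dependency must refer to an activity defined in the DAG.
    known = set(names)
    deps = {}
    for activity in activities:
        declared = activity.get("dependencies", [])
        for dep in declared:
            if dep not in known:
                problems.append(f"{activity['name']} depends on unknown activity {dep}")
        deps[activity["name"]] = [dep for dep in declared if dep in known]

    # Detect cycles by repeatedly resolving activities whose dependencies are all resolved.
    resolved, remaining = set(), set(deps)
    progressed = True
    while remaining and progressed:
        ready = {name for name in remaining if all(dep in resolved for dep in deps[name])}
        progressed = bool(ready)
        resolved |= ready
        remaining -= ready
    if remaining:
        problems.append("Circular dependency among: " + ", ".join(sorted(remaining)))

    return problems


good_dag = {"activities": [{"name": "Extract"}, {"name": "Load", "dependencies": ["Extract"]}]}
cyclic_dag = {"activities": [{"name": "A", "dependencies": ["B"]}, {"name": "B", "dependencies": ["A"]}]}

print(validate_dag(good_dag))    # []
print(validate_dag(cyclic_dag))  # ['Circular dependency among: A, B']
```

Running a check like this in the master notebook surfaces configuration mistakes as a clear message before any worker notebook starts.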

Integrating with DevOps Practices

For organisations looking to integrate Spark Notebook Orchestration into their DevOps workflows, consider exploring Arinco’s insights on Pipeline Deployment Order in Microsoft Fabric, Azure IaC DevOps Series: Build, and Azure Fabric Resource Deployment with PowerShell and REST APIs. These resources provide valuable guidance on managing deployments and infrastructure as code within the Microsoft Fabric ecosystem.

Conclusion

Implementing the Master-Worker model in Microsoft Fabric is a scalable, cost-effective, and resource-optimised strategy for managing notebook execution. It reduces Spark session overhead, simplifies dependency handling, and enables better monitoring and debugging.

Whether you’re orchestrating one batch or building out layered data pipelines, this pattern is a reliable foundation. Spark Notebook Orchestration in Fabric isn’t just efficient; it’s essential for teams looking to modernise their data engineering workflows.

What can I do next?

- Start small: try refactoring a pipeline to use a master notebook for execution.

- Template your metadata: use structured JSON for flexible orchestration.

- Use the monitoring hub to validate success and visualise execution.

Contact Arinco to learn how we can help you optimise your data workflows with Microsoft Fabric and implement efficient notebook orchestration strategies.

About the Author

Lackshu is a Data Architect and Data Engineer specialising in Microsoft technologies. Lackshu has 30 years' experience in IT, with the last 15 years focused on Data Platforms, Data Engineering and Data Analytics.