I’ve seen a few people share a screenshot this weekend of a post on the OpenAI subreddit from someone who realised they were spending $1200 a month on OpenAI for “simple” tasks like email filtering and transformation. They switched the model to gpt-4o-mini and brought it down to $200 a month.

The internet laughed. I saw plenty of hot takes, with people noting that a “real” engineer would have run these simple tasks on a cheap VPS or serverless function, at a cost of anywhere from $5 a month all the way down to pennies.

Perhaps more importantly, people were taking this as “proof our jobs are safe”, noting that this level of inefficiency is not sustainable.

This is completely the wrong take.

Why the laughter misses the point

There are several problems with all these hot takes, not least among them that nearly every aspect of what they are calling out is wrong. Let’s examine some of these observations.

It’s not pennies

There’s a cost to setting up regex pipelines, servers, or serverless functions. We love to repeat the story about how you pay for the years it took to learn how to do it, not the minutes it took to do the work. That means for these non-engineers, there’s a consulting cost. And even if it’s just one day of engineering time, that’s about the same as this person’s entire year of AI usage at $200/month.
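
To put rough numbers on that, here is a minimal break-even sketch. The $200 and $5 monthly figures come from the post and the hot takes. The $2,400 engineering day is an assumption, taken from the "one day is about a year of usage" comparison above, and `breakeven_months` is a name made up for this illustration.

```python
import math


def breakeven_months(upfront_cost: float, current_monthly: float, new_monthly: float) -> float:
    """Months until an upfront engineering cost is recovered by monthly savings."""
    monthly_saving = current_monthly - new_monthly
    if monthly_saving <= 0:
        # No saving means the upfront cost is never recovered.
        return math.inf
    return upfront_cost / monthly_saving


# From the post: $200/month on gpt-4o-mini versus a $5/month VPS.
# ASSUMPTION: one engineering day costs about $2,400 (a year at $200/month).
DAY_RATE = 2400

one_day = breakeven_months(upfront_cost=1 * DAY_RATE, current_monthly=200, new_monthly=5)
one_week = breakeven_months(upfront_cost=5 * DAY_RATE, current_monthly=200, new_monthly=5)

print(f"1 day of engineering:  {one_day:.1f} months to break even")
print(f"5 days of engineering: {one_week:.1f} months to break even")
```

Under those assumptions a single day of engineering takes about a year to pay for itself, and a more realistic week of work takes about five years, before counting any maintenance.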

Opportunity cost matters

The person who set up this AI workflow got value immediately. Achieving that $5 a month efficiency means waiting for approval for a software project, finding and engaging a consultant, going through development cycles, deployment, and testing, and only then seeing results.

And while they’re waiting for all of this, the value they would have gained from that $200/month, or even the $1200/month option for that matter, is lost.

Time to value is one of the hardest things for enterprise to deliver, and that’s why shadow IT emerges in the first place. A working solution that delivers value now beats a “more efficient” or “better engineered” solution every time.

Costs are falling fast

The very post we are mocking here illustrates this better than I can put into words. They already dropped their cost to one sixth, and that’s now. A year from now that $200 workload will likely cost $20, and a year after that, pennies.

Laughing at the cost today ignores the curve.

Value matters, not cost

If they can afford it, and it delivers business value, it’s worth it. The waste isn’t in “overpaying” for a working system; the waste is in the time, money, and effort that too often gets sunk into things that never deliver value at all.

Why it matters to enterprise

At first glance, this might look like a small-business problem: a few hundred dollars a month in API bills. But the dynamics are familiar, and the consequences are bigger in enterprise:

- Compliance: regulatory obligations mean “just throw a regex at it” isn’t acceptable. Auditability, retention, and explainability matter.

- Governance: shadow AI usage creates risks. Centralising and monitoring workloads is non-negotiable.

- Integration: email filtering doesn’t exist in a vacuum. It must connect securely with CRMs, ticketing systems, and data lakes.

- Resilience: an API experiment that works for one user doesn’t scale to tens of thousands without hardening.

Granted, no organisation is going to turn down a saving of $1000 a month, but the real cost to larger enterprises isn’t the API tokens consumed. It’s unmanaged risk, poor integration, and brittle prototypes that don’t survive first contact with scale.

Instead of trying to figure out how to convince the people spending $200 a month to do it the “right” way, we should be trying to figure out how to compete with that pace of value delivery. Maybe it’s an opportunity to learn something, rather than to teach something.

The right role for AI in the enterprise

None of this means that larger enterprises are frozen out of these new opportunities. There are practical ways we can all innovate with AI, even in the most stringent of governance-led organisations.

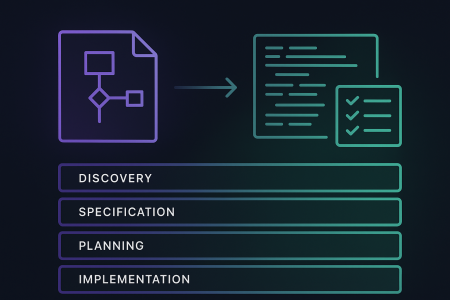

Firstly, AI is an excellent prototyping tool. It can prove quickly that something is possible, demonstrate value, and get an idea in front of stakeholders.

This can overcome one of the biggest hurdles to approval – the classic chicken and egg problem of demonstrating value without budget, in order to secure the budget.

Once you have a solid POC, engineers can then turn the prototype into a platform. That means wrapping it in security, scaling it to enterprise workloads, ensuring compliance, integrating it with core systems, and reducing cost over time through automation.

This is a proven pattern. We already do it with spreadsheets, Access databases, and shadow SaaS. AI just accelerates the prototype stage.

Short-term opportunities for enterprise engineering teams

I mentioned above that instead of wasting our energy trying to disprove the value the original poster found, we should be focusing on how we can add value. Here are some practical, immediate opportunities:

- AI usage audits: help organisations discover where AI is already being used, often outside official channels, and provide a path to lower costs, reduced risk, and better governance.

- Productised migration: build approaches to take ad-hoc AI workloads and migrate them into enterprise-ready systems. That might mean replacing direct API usage with scripted automations, or wrapping models in services with observability, retries, and billing controls.

- Governance and compliance frameworks: define standards for how AI prototypes move into production. This ensures innovation isn’t stifled, but risks are managed.
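
As an illustration of "wrapping models in services with observability, retries, and billing controls", here is a minimal Python sketch. Everything in it is hypothetical: `ManagedModel`, `BudgetExceeded`, and `fake_model` are names invented for this example, and the model call is a stand-in function that returns a text and a cost rather than a real provider SDK.

```python
import logging
import time
from typing import Callable, List, Tuple

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("managed_model")


class BudgetExceeded(RuntimeError):
    """Raised when the spend cap has already been reached."""


class ManagedModel:
    """Hypothetical wrapper adding retries, logging, and a spend cap to a model call."""

    def __init__(
        self,
        call_model: Callable[[str], Tuple[str, float]],  # returns (text, cost in USD)
        monthly_budget_usd: float,
        max_attempts: int = 3,
        base_delay_s: float = 0.5,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        if max_attempts < 1:
            raise ValueError("max_attempts must be at least 1")
        self.call_model = call_model
        self.monthly_budget_usd = monthly_budget_usd
        self.max_attempts = max_attempts
        self.base_delay_s = base_delay_s
        self.sleep = sleep
        self.spent_usd = 0.0

    def complete(self, prompt: str) -> str:
        # Billing control: refuse new calls once the cap has been reached.
        if self.spent_usd >= self.monthly_budget_usd:
            raise BudgetExceeded(
                f"spent ${self.spent_usd:.2f} of ${self.monthly_budget_usd:.2f}"
            )
        for attempt in range(1, self.max_attempts + 1):
            try:
                text, cost = self.call_model(prompt)
            except (ConnectionError, TimeoutError) as exc:
                # Observability: log every transient failure.
                logger.warning("attempt %d/%d failed: %s", attempt, self.max_attempts, exc)
                if attempt == self.max_attempts:
                    raise
                # Retry with exponential backoff: 0.5s, 1s, 2s, ...
                self.sleep(self.base_delay_s * 2 ** (attempt - 1))
            else:
                self.spent_usd += cost
                logger.info("call ok, cost $%.4f, total $%.4f", cost, self.spent_usd)
                return text
        raise RuntimeError("unreachable")


# Stand-in for a real model call: times out twice, then succeeds.
attempts = {"count": 0}


def fake_model(prompt: str) -> Tuple[str, float]:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TimeoutError("simulated timeout")
    return f"summary of: {prompt}", 0.02


delays: List[float] = []  # record backoff delays instead of actually sleeping
client = ManagedModel(fake_model, monthly_budget_usd=0.03, sleep=delays.append)
result = client.complete("quarterly invoice email")
print(result, delays, client.spent_usd)
```

A production version would persist spend per team and reset it each billing period, but even this much turns an ad-hoc API call into something that can be monitored and capped.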

Long-term opportunities

- Prototype fast, harden later: encourage teams to use AI for proofs of concept, but set clear expectations that successful prototypes will be engineered into sustainable products.

- Enterprise AI enablement: focus less on building individual use cases and more on providing platforms, guardrails, and shared services that allow any team to safely experiment and scale.

- Cost efficiency at scale: falling model prices will reduce the “sticker shock” of AI usage. The value will come from engineering the systems around AI that optimise spend across thousands of use cases.

The bigger picture

We have to face a few harsh truths here. First of all, it only takes one savvy non-engineer to ask ChatGPT – “hey, how can I make this process more efficient?” With modern tooling and agentic AI, that $5 a month serverless function will be up and running for them in no time.

The Reddit example shows us something important: non-engineers are already comfortable building AI solutions, even when they’re inefficient. In enterprise, that’s both a risk and an opportunity. Left unmanaged, it’s shadow IT all over again. Harnessed correctly, it’s the fastest route we’ve ever had from idea to impact.

Just as consumer devices reset expectations for enterprise software a decade ago, AI is resetting expectations for enterprise speed today.

In the early 2010s our stakeholders started to see how huge technology companies could deliver rapid iterations of not just software, but hardware too. Designed for humans, not spreadsheets.

And they started to question, rightly, whether our stubborn refusal to replace their greenscreen terminal with a more intuitive and, dare I say it, delightful UI, was truly justified.

And guess what? They won that time. And they’ll win this time too.

Our role as engineers is not to laugh at the “inefficiency.” It’s to provide the path from prototype to platform. That’s how we protect the organisation from risk, while giving it the speed and value it increasingly demands.